This item has been moved to my therapy blog.

Monthly Archives: February 2013

A Beginner’s Guide to Using SAS 9.2

Life introduced me to the statistical data processing program called SAS (originally an acronym for Statistical Analysis System). I wondered what would be the best way for a non-expert to approach it.

The short answer seemed to be that the best way for a non-expert to approach SAS was to start with Excel — and stay there. SAS was not designed to be user-friendly. It wasn’t horrible. It just needed to open with a greeting that said, “Thank you for choosing to spend your excess free time learning how to use our software. We hope you enjoy the experience.” And, really, I know I shouldn’t be too snide about it. People have to learn programming languages all the time. It’s just that a spreadsheet user can be spoiled into thinking that this really doesn’t have to be that hard. So this is a beginner’s guide, because I was a beginner. To emphasize, this is no guide to statistical interpretation. This was just a bare-bones attempt to make the stupid program work, brought to you by someone who has unkind thoughts toward standard forms of statistics education in the first place.

The first struggle was to get data into SAS. Originally, I had the writeup of that ordeal in this post, but it became such a megillah that I finally moved it to another post. Some of the following remarks reflect instances when I had to give up on importing the Excel data and instead just entered it manually (or, if the system was cooperating, copied and pasted it) and then proceeded with SAS code using the CARDS command.

I went on to learn other things about the windows that were now open in the SAS interface. The View menu pick would give me the option of opening various SAS windows, assuming they weren’t open already. What I seemed to need was the Log window, to tell me what had failed and to provide cryptic clues that I could Google to find out what had actually happened; the Output window, to show me nothing at all until, at long last, I did manage to do something right; and the Editor window, where I would be entering SAS codes for the purpose of producing error messages in the Log window. I was pleased to discover that a right-click > Edit > Clear All, in the Log, would remove all evidence of my SAS failings.

At one point, after importing my data into SAS, I got an error message that said, “Variable X in list does not match type prescribed for this list,” where X was the name of the variable. One part of the problem was that I was trying to import names. So, for instance, the first row of my spreadsheet might have had the name of John and his height and weight. SAS was not especially interested in John. In the simplest approach, it just wanted numbers. So in the process of naming variables (using VAR variable1 variable2), where the variable names could be the same as the names I had assigned at the tops of the columns in Excel, it seemed that I just needed to ignore the first column, labeled “Names,” in which I had the subjects’ actual names as distinct from numerical data about them. There were ways to work with words in SAS, but I didn’t get into that.

Correlation and Scatterplot

The first analysis I needed to do was a Pearson correlation. I found exactly what I was looking for on a SAS support page: a simple, step-by-step guide to run a Pearson correlation analysis. To run the analysis, it said, I had to choose Tasks > Multivariate > Correlations. The only thing I lacked was a Tasks option. So since I couldn’t follow that advice, I eventually figured out that, as an alternative, I could run these commands (making sure I had selected the Editor, not some other window, before clicking the running-man Run icon):

proc freq data = Q1;

table IQ Arithmetic;

run;

proc corr;

var IQ Arithmetic;

run;

When I ran that, there was good news, and there was bad news. The good news is that the second part ran and gave me something in the Output window. The bad news is that the first part produced “ERROR: No data set open to look up variables.” (This was one of many instances when references to the imported Excel spreadsheet failed; see the other post, linked above, for more details.) I did discover, at this point, that it would work, at least sometimes, to refer to the data file (Q1) according to its location, like sasuser.Q1.

Now I needed to create a scatter plot for my correlated variables. A UCLA webpage persuaded me that, for this, all I needed to do was to type some commands into the editor:

TITLE 'Scatterplot - Two Variables';

PROC GPLOT DATA=Q1;

PLOT IQ*Arithmetic ;

RUN;

IQ and Arithmetic were the two variables being correlated, and Q1 was my data file. This didn’t run at all. I tried this:

proc freq data = sasdata.Q1;

table IQ Arithmetic;

run;

and that worked! I had a table in the Output window. I tried again with the UCLA advice, modified thus:

TITLE 'Scatterplot - Two Variables';

PROC GPLOT DATA=sasdata.Q1;

PLOT IQ*Arithmetic ;

RUN;

That worked: I had a scatterplot showing IQ on the Y axis and Arithmetic on the X axis. (Would have expected X to come first.) Now I needed to dress it up. For one thing, the default plus symbols (” + “) on the plot were not very visible. Another UCLA webpage led me to think that a dot might be more visible, depending on size. Following guidance from a York University webpage, I tried sticking this additional line of code in there:

SYMBOL1 V=DOT C=RED H=3 ;

That definitely worked. Big red polka dots. My graph had chickenpox. Now I wanted to change the size of my graph, and make the numbers on the X and Y axes bigger. A SAS webpage, combined with another on fonts and one on graph axes, led me to try this:

GOPTIONS hsize=15cm vsize=15cm ftext=Arial height=2;

That worked too. I put these lines in the Editor window, just after the PROC GPLOT line shown above. I could have tried to figure out how to output the graph as a PNG or JPG file, but instead I just did a screen shot (with the keyboard’s PrtSc key), pasted it into IrfanView, dragged a box to indicate what I wanted to crop (using the left mouse button) and then hit Ctrl-Y to crop, and did a Ctrl-C Ctrl-V to paste it into Microsoft Word.

Regression and Variables

The next time I was in SAS, I was there to do something with a regression. (Some of these examples are borrowed or adapted from Ronna Turner.) I used these commands:

proc plot data = x1;

plot Height*Exam;

run;

proc reg simple;

model Exam = Height / p stb;

run;

The first set of three lines began with a command to do a plot, using my imported X1 file as my data source. The objective of those first three lines was to get a scatterplot that I could eyeball to check whether my data met key regression assumptions (including heteroscedasticity, normality, and linearity). The second set was to output various values that I was learning to interpret.

At this point, still trying to get the Excel import process to work, I posted a question on that, to make sure that everyone would know how confused I was. Eventually I gave up, for purposes of this regression exercise, and just used the CARDS command and manual data entry. I wound up with this in the Editor window:

data example2 ;

input Exercise Aggress9 Aggress10 Aggress11 Aggress12 ;

PhysicalAggression = (Aggress9 + Aggress10 + Aggress11 + Aggress12) / 4 ;

cards ;

120 4 3 5 5

0 . . . .

0 1 1 2 3

120 2 2 3 3

220 1 1 2 4

;

proc reg simple ;

model PhysicalAggression = Exercise / stb ;

run ;

So, to explain that, it seemed I was setting up a temporary database called example2; I was going to be inputting data into it for five variables (Exercise through Aggress12); I was creating a new variable called PhysicalAggression, whose value was the average of Aggress9 through Aggress12 (which I think were four different behaviors that someone believed were important components of physical aggression); I was telling SAS to get ready for some punchcards (i.e., the old way of putting data into computers) (I think “datalines” might have worked instead of “cards”); I was providing a handful of values for each of those Exercise and Aggress variables; and I was running a simple regression procedure. Although it was not entirely clear to me, it seemed that the MODEL statement was supposed to tell SAS which dependent and independent variables I was using and, optionally, would allow options like the STB option shown here. And this worked.

t Tests

A couple weeks later, I came back to SAS to run some t test calculations. I was still having problems getting SAS to see my Excel file, so once again I used manual data entry. I started with an independent samples t test. For that, I used these commands:

data dataA ;

input tutor skills ;

cards ;

1 54

1 40

1 68

1 54

1 55

1 35

2 50

2 48

2 35

2 65

2 70

;

proc ttest ;

class tutor ;

var skills ;

run;

proc sort ;

by tutor ;

run ;

proc univariate normal plot ;

by tutor ;

var skills ;

run ;

These commands compared Group 1, containing six values (54, 40, . . .) against Group 2, containing five values (50, 48, . . .). The temporary database was called dataA. Group 1 contained the independent variable (i.e., the identity of the tutor), and Group 2 contained the dependent variable (i.e., the skills learned). The PROC commands (ttest, sort, and univariate) thus prioritized (or classified according to) the tutor variable. We have here the actual TTEST procedure, plus a couple of procedures to test underlying assumptions of the t test. I wasn’t sure whether it was necessary to have those multiple “run” statements in the mix, but that seemed to be the safer approach.

The next mission was to try a t test for dependent samples. I wasn’t sure if I could use the same data input format as above (e.g., “1 54” and “1 40”). It seemed the preferred approach was to list both of the paired scores on the same line. So the commands I used for a dependent samples t test were as follows:

data dataB ;

input TestPre TestPost ;

D = TestPost - TestPre ;

cards ;

300 320

350 340

240 260

210 210

275 280

;

proc means n mean std stderr t probt ;

var D ;

run ;

proc univariate normal plot ;

var D ;

run ;

The “D” value was just the difference between the pretest and posttest scores. Because it was a dependent t test, these procedures left out the test for homogeneity of variances. The “t” option for the PROC MEANS procedure was the command for the dependent t test.

One-Way ANOVA

For my final trick, I used SAS to do ANOVA calculations. Things began pretty much the same as before (using three data points in each of three separate groups, in this case, with group numbers on the left column of manually entered items):

data anova1 ;

input groups1 values1 ;

cards ;

1 4

1 3

1 5

2 6

2 5

2 3

3 8

3 9

3 8

;

proc sort ;

by groups1 ;

run ;

proc univariate normal ;

by groups1 ;

var values1 ;

run ;

proc glm ;

class groups1 ;

model values1 = groups1 ;

means groups1 / hovtest = bf tukey alpha = .10 ;

run ;

Some parts of this were already explained above. The SORT procedure would put the data in order, if it weren’t already in order by independent variable (i.e., group); in this case, the SORT procedure was unnecessary, since the data were already sorted by groups 1, 2, and 3. The NORMAL option supplied a test for normality, which was an underlying assumption of ANOVA. “GLM” was short for “general linear model,” “group1” was my name for the independent variable — namely, the group from which the observed value came, and “values1” was my name for that observed value (i.e., the dependent variable). The MEANS statement would give me the smorgasbord of descriptive statistics; the HOVTEST option would test for homogeneity of variance, another ANOVA assumption. (“Bf” specified the Brown-Forsythe homogenity test.)

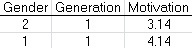

A few weeks later, I returned to ANOVA for a subsequent project. This time, I had two independent variables — gender and generation — and seven dependent variables, comprising the responses to seven questions on a questionnaire. For each participant, I had to average the seven responses into one outcome variable, which I called “motivation.” The dataset had some excess records. I excluded those, and also did the averaging, in Excel, before importing into SAS. The first three rows of my Excel file thus looked like this:

I didn’t have the same number of people in each group, so that seemed to qualify as an unbalanced ANOVA. It looked like GLM was the right procedure for that. This would apparently have been best set up as a two-way ANOVA, but we hadn’t covered that; we were just supposed to do it as two one-way ANOVAs. The following code shows what I did with gender as the independent variable; I used the same code again, with generation as the independent variable, by simply replacing each occurrence of “gender” with “generation.”

proc sort data=Q1 ;

by gender ;

run ;

proc univariate normal data=Q1 ;

by gender ;

var motivation ;

run ;

proc glm data=Q1 ;

class gender ;

model motivation = gender ;

means gender / hovtest = bf tukey alpha = .10 ;

run ;

I had noticed that the SAS log said that it had imported 243 observations. There were actually 244 rows in the spreadsheet. Leaving out the first row (containing “Gender” and other variable names) didn’t change this problem; in that case it said 242 observations when there were actually 243 rows. I wondered if SAS was skipping a row for some reason. I ran it again, but this time I copied and pasted the data into the code, with corresponding commands. So before the start of the code just shown (beginning with “proc sort data=Q1”), I added these commands:

data Q1 ;

input gender generation motivation ;

cards ;

2 1 3.14

1 1 4.14

[rest of data, copied and pasted from Excel]

;

I ran the code shown above, with those additions. The log said there were 243 observations, which was correct. The means were identical between these two approaches, to seven decimal places, for both gender = 1 (which, in this case, was male) and gender = 2. The approaches appeared to achieve the same thing; it was just a question of whether one wished to copy and paste raw data or, instead, to refer to an imported Excel spreadsheet.

I wondered whether the Excel averaging that I had done, across those seven dependent variables, was the same as the SAS averaging. It seemed obvious that the simple process of averaging must surely produce identical results; nonetheless, I thought I would check. To do the averaging in SAS, I replaced the lines just shown with these:

data Q1 ;

input gender generation dv1 dv2 dv3 dv4 dv5 dv6 dv7 ;

motivation = (dv1 + dv2 + dv3 + dv4 + dv5 + dv6 + dv7)/7 ;

cards ;

2 1 3 4 4 2 3 2 4

1 1 3 5 5 3 4 4 5

[rest of data, copied and pasted from Excel]

;

Note that, in this case, the copied and pasted raw data consisted of the two independent variables (only one of which was in play at any given point, since this was a one-way ANOVA) and then the seven dependent variables that I had averaged together, in Excel, to produce the third (“motivation”) value shown above. (Due to balky software, sometimes I had to do my copying and pasting via right-click (context) menu rather than Ctrl-C.)

At any rate, this ran without errors. Oddly, the SAS log said there were 243 observations and ten (not nine) variables. The means of the motivation variable differed, for both gender = 1 and gender = 2, from the means calculated from the motivation variable calculated in Excel, presumably due to differences in rounding; however, the differences were very slight (e.g., 3.51819277 vs. 3.51807229). For practical purposes, it seemed that the approach of organizing the data in Excel before importing into SAS (and thus reducing the amount of coding in SAS) was workable. Hence, it seemed that the additional lines shown above were unnecessary; the set shown earlier (beginning with “proc sort data=Q1”) was sufficient.

At this point, as noted above, I re-ran with “generation” rather than “gender” as the independent variable. Although I was once again using the abbreviated code shown above, I saw that the log said I had ten variables, not three. This seemed to indicate that SAS was still using the many raw values that I had just read into the system using the CARDS command shown above — not the Excel file for which that abbreviated code was designed. To fix that, I re-imported the Excel file, with its mere three columns of data, and in the import process I told SAS to go ahead and overwrite the prior Q1. The log said, “ERROR: Import cancelled. The dataset WORK.Q1 is being used and cannot be replaced.” I killed SAS and tried again. This time, the import worked.

I wondered whether SAS was necessary for these processes. I also wanted to verify that this last SAS calculation had run correctly. Accordingly, I looked for advice on doing one-way ANOVA in Excel 2010. An eHow article said that I needed to begin with File > Options > Add-Ins > Go > Analysis ToolPak. With that add-in installed, the next step was to go to Data > Analysis > Data Analysis > Anova: Single Factor. I highlighted everything, including headers, and thus clicked the “Labels in First Row” box. This gave me, of course, a disappointingly small set of data, when compared to the pages that SAS had output in response to the several commands shown above. Apparently I would have to run a variety of Excel procedures to obtain similar output there, and would also have to learn how to interpret them. So I was reminded of the upside of coding: while the task itself may be less user-friendly than various measures in Excel, you can produce a lot of information with just a few commands in code.

I decided to take a closer look at what SAS was doing, just to be sure that my code was working right. I was not too sure what SAS kept in memory, so I killed SAS and started it afresh. I used the lines of code shown above (beginning with “proc sort data=Q1”). There was no INPUT command or other assignment of variables in those lines of code. Then I imported an Excel spreadsheet containing 83 rows of data plus one header row. The header row contained the same titles as above: gender, generation, and motivation. That header row would be the only way that SAS would know what those lines of code were talking about, when they referred to “by generation” and “var motivation” and so forth. Was SAS going to draw its variable definitions from those column headings? The code ran without errors. The output specified values for n and the mean, for each of three generational subgroups within the imported data. I calculated those same values in Excel. They matched up. It appeared that SAS had obtained its variable definitions from the Excel column headings, and had produced the same n and mean values as Excel for those generational subgroups. It did seem, however, that I was best advised to kill and restart SAS after running an ANOVA for one subgroup, because in this test it kept using those same 83 rows of data when I thought I had overwritten those with another imported Excel spreadsheet containing 160 rows. Possibly I had overlooked a warning in the log.

That seems like a rather flat note on which to end this soliloquy, but you know how it is: the final exam comes, and that’s the end. Until next time.